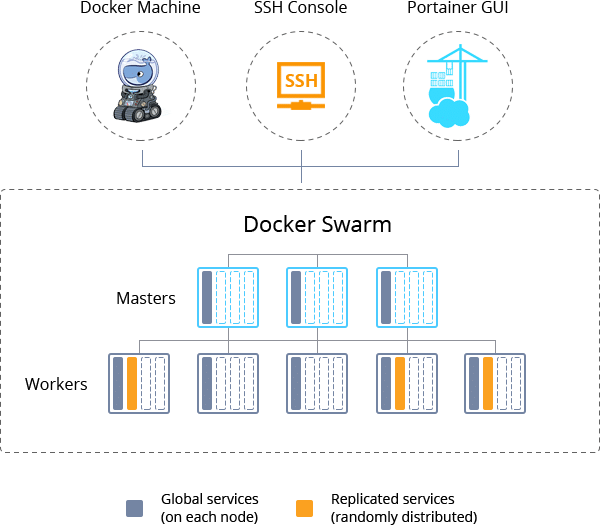

Recently, we introduced the Docker Swarm Cluster package: a pre-configured automation solution that creates a number of interconnected Docker Engine nodes running in swarm mode, which together constitute a highly available and reliable cluster. The solution provides major benefits for Docker-based application hosting, letting you run Docker images as swarm services and easily scale them to the desired number of replicas. This ensures high availability, failover protection, and even workload distribution between cluster members.

In this article, we’ll walk through an example of deploying a service to a Docker Swarm cluster from a predefined Docker stack YAML file, and cover most of the basic actions required to manage your project.

In light of the upcoming Docker Cloud shutdown, the Jelastic out-of-the-box UI management panel can be one of the major deciding factors when selecting a new platform for swarm cluster hosting.

Basic Management Operations & Service Deployment

If you don’t have a Docker Swarm cluster yet, follow the linked instruction to get one in a matter of minutes, then connect to your swarm in whichever way you prefer, e.g., via the Portainer GUI, Jelastic SSH Gate, or Docker Machine.

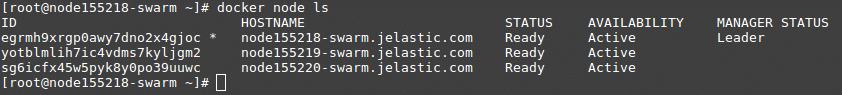

Once a connection to the Docker Swarm manager node is established (we’re working over Jelastic SSH Gate in this example), you can start managing your cluster. For example, check the list of nodes your swarm cluster consists of by executing the following command:

docker node ls

As you can see, there are one manager and two worker containers in our swarm cluster. Manager nodes automatically elect a single Leader to conduct orchestration tasks, while the remaining managers (if any) are marked as Reachable and let the cluster tolerate a manager failure.
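To double-check the manager and worker counts without reading the table by eye, the node list can be summarized with a small helper. This is only a sketch: count_roles is a hypothetical function name, and it assumes the node list is printed with the --format option of docker node ls using the .Hostname and .ManagerStatus placeholders, so that worker lines carry an empty second column.

```shell
# Summarize swarm nodes by role.
# Expects one "<hostname> <manager status>" pair per line on stdin;
# the manager status column is empty for worker nodes.
count_roles() {
  awk '
    NF >= 2 { managers++ }   # Leader, Reachable or Unreachable
    NF == 1 { workers++ }    # no manager status means a worker
    END { printf "managers=%d workers=%d\n", managers, workers }
  '
}

# On a manager node (not executed here):
#   docker node ls --format '{{.Hostname}} {{.ManagerStatus}}' | count_roles
```

For a cluster like the one in this example, the helper should report one manager and two workers.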

Now, let’s look at how to deploy a service to the cluster, using both the manual and the automated flows.

Manual Swarm Services Deployment

You can manually run any Docker image within your cluster as a swarm service.

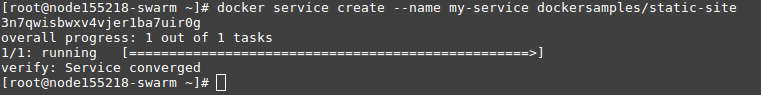

1. Use the following command to create a service (refer to the linked page to learn about possible additional options):

docker service create --name {name} {image}

Where:

- {name} – any preferable name for the service;

- {image} – any desired Docker image (e.g., dockersamples/static-site).
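As an illustration, the placeholders can be filled in with the values used in this article (my-service and dockersamples/static-site). The wrapper below is a hedged sketch rather than part of the package: create_service and the DRY_RUN switch are hypothetical names introduced here, and by default the function only prints the command so you can review it before running it on a manager node.

```shell
# Build (and optionally run) the service creation command.
# DRY_RUN is a hypothetical switch: leave it unset to print the command,
# set DRY_RUN=0 to actually execute it on a swarm manager node.
create_service() {
  name=$1
  image=$2
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "docker service create --name $name $image"
  else
    docker service create --name "$name" "$image"
  fi
}

create_service my-service dockersamples/static-site
```

Note the double hyphen in --name: a single dash or a typographic dash pasted from a web page is not accepted by the Docker CLI.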

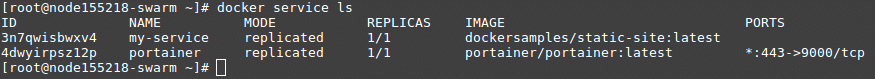

2. In a moment, check your services with the appropriate command:

docker service ls

As you can see, the newly added my-service service is running alongside the default portainer one (if that option was selected during swarm cluster installation).

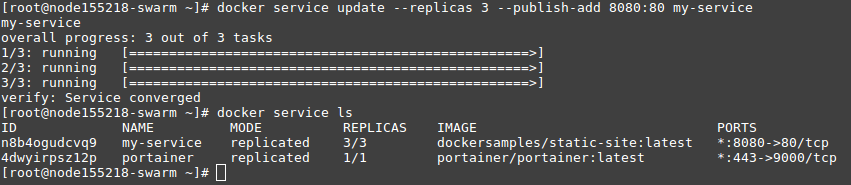

3. Let’s update our service to improve reliability through replication and, at the same time, publish it to make it accessible over the internet.

docker service update --replicas {replicas} --publish-add {ports} {service}

Where:

- {replicas} – the number of replicas to create for the service;

- {ports} – two colon-separated ports in the published:target form, where published is the port the service is exposed on and target is the port the deployed Docker image listens on, e.g., 8080:80;

- {service} – the name of the service to be updated.

Wait a minute for all of the replicas to start.
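Instead of waiting for a fixed time, you could poll the REPLICAS value until the running count matches the desired one. The following is a minimal sketch under a few assumptions: replicas_ready and wait_for_service are hypothetical helper names, the value is read with docker service ls and its .Replicas format placeholder, and the 30 attempts with a 2-second pause are arbitrary numbers. Keep in mind that the name filter matches by prefix, so the sketch simply takes the first matching line.

```shell
# Return success when a REPLICAS value such as "3/3" shows that
# the running count equals the desired count.
replicas_ready() {
  counts=${1%% *}            # drop any note after the first space
  case $counts in
    */*) ;;
    *) return 1 ;;           # empty or unexpected value
  esac
  running=${counts%%/*}
  desired=${counts#*/}
  [ "$running" -eq "$desired" ] 2>/dev/null
}

# Poll a service until all replicas are up, giving up after about 60 seconds.
wait_for_service() {
  service=$1
  tries=0
  while [ "$tries" -lt 30 ]; do
    state=$(docker service ls --filter "name=$service" \
      --format '{{.Replicas}}' | head -n 1)
    if replicas_ready "$state"; then
      return 0
    fi
    tries=$((tries + 1))
    sleep 2
  done
  return 1
}

# On a manager node (not executed here):
#   docker service update --replicas 3 --publish-add 8080:80 my-service
#   wait_for_service my-service && echo "my-service is fully replicated"
```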

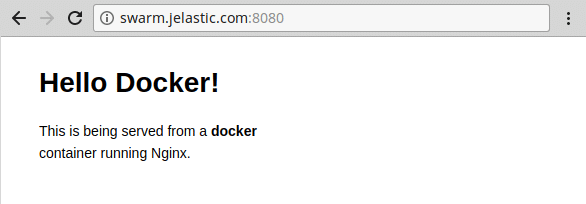

4. Now, you can access your service through the specified port (8080 in our case):

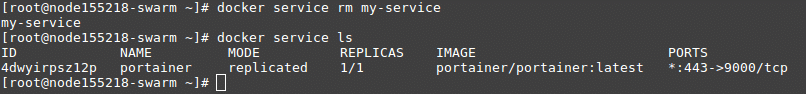

5. To remove a service, enter the following command:

docker service rm {service}

Those are the basics of swarm service management; to learn about more advanced options, please refer to the official documentation.

Automated Service Deployment with Stack File

To deploy your service automatically, you’ll need an appropriate Docker Compose file that describes the services to deploy and their settings.

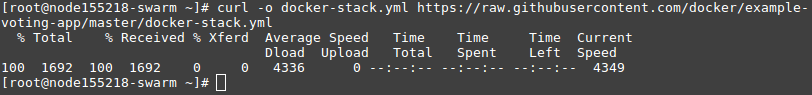

1. You can either create such a stack file using any editor (e.g., vim) or fetch it from an external source (e.g., with curl).
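If you prefer to start from scratch, a stack file can also be written straight from the shell. The snippet below is only a hypothetical minimal example and not the voting application used later in this article: the my-stack.yml file name, the web service name, and the replica count are assumptions chosen for illustration, while the image and the 8080:80 port mapping repeat the values from the manual flow above.

```shell
# Write a minimal, hypothetical stack file to the current directory.
cat > my-stack.yml <<'EOF'
version: "3"
services:
  web:
    image: dockersamples/static-site
    ports:
      - "8080:80"        # published:target
    deploy:
      replicas: 3        # number of service replicas in the swarm
      restart_policy:
        condition: on-failure
EOF
```

The deploy section is what distinguishes a stack file from a plain Compose file: it is the part that swarm mode reads to decide how many replicas to run and how to restart them.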

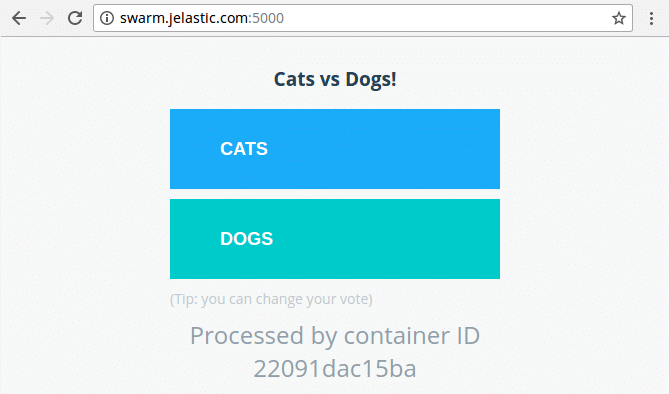

In our case, we’ve downloaded the stack sources for an example voting application, which helps find out whether option A (Cats) is more popular than option B (Dogs) based on real user choices. The voting result is displayed as the relative percentage of voters’ preferences.

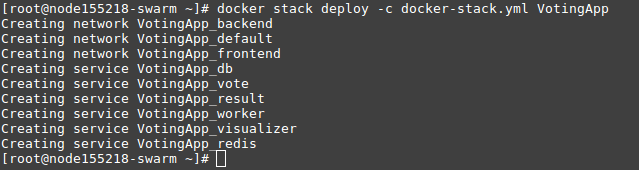

2. To deploy your app, use the following command and provide the stack file:

docker stack deploy -c {compose-file} {name}

Where:

- {compose-file} – the file you’ve prepared in the previous step (docker-stack.yml in our case);

- {name} – any preferred name (for example, VotingApp).

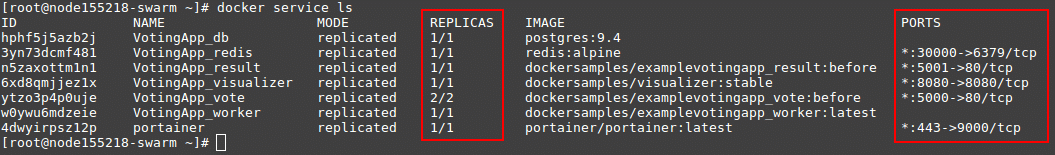

3. Now, let’s check the running services on the swarm cluster with:

docker service ls

As you can see, all of the services specified in the Docker stack file are already started (wait a minute if some REPLICAS are not up yet). Also, in the PORTS column, you can find the port number each published service runs on (e.g., 5000 for voting and 5001 for the results output in our case).
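To pull the published port numbers out of that listing in a script, the PORTS value can be trimmed with plain shell parameter expansion. This is a sketch that assumes the value has the form *:5000->80/tcp, as docker service ls usually prints it; published_port is a hypothetical helper name, and only the first mapping is returned when a service publishes several ports.

```shell
# Extract the published port from a PORTS value such as "*:5000->80/tcp".
published_port() {
  mapping=${1%%,*}         # keep the first mapping if several are listed
  mapping=${mapping#*:}    # strip the "*:" address prefix
  printf '%s\n' "${mapping%%->*}"
}

# On a manager node (not executed here):
#   docker service ls --format '{{.Name}} {{.Ports}}' |
#     while read -r name ports; do
#       echo "$name -> port $(published_port "$ports")"
#     done
```

Services without published ports have an empty PORTS value, in which case the helper prints an empty line.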

4. Finally, open your service in a browser, adding the appropriate port to the address if needed.

This way, you get extra service reliability, with automatic failover protection and even workload distribution, when hosting your dockerized services within a Docker Swarm cluster. And the Docker Swarm solution, pre-packaged by Jelastic for one-click installation, gives you all of these benefits in a matter of minutes.

Tip: For a better understanding of how the Docker Engine and Docker Swarm Cluster packages are implemented, refer to the corresponding articles.

Hopefully, this article has been useful to you. Try deploying your services to a Docker Swarm cluster hosted for free at our Jelastic PaaS platform.